We live in a golden age where technology influences every facet of our daily lives. This is especially true with the rise of artificial intelligence (AI). AI is everywhere, from virtual assistants like Alexa, Google Home and Siri to the creation of new disruptive technologies like autonomous cars, blockchain and cryptocurrency. Beyond healthcare, finance, retail, customer analytics and manufacturing, AI has started to influence the field of software testing. A plethora of AI-based solutions now claim to solve problems in software testing that have existed for decades. With these advancements in technology, the elephant in the room is: “Do we still need humans in software testing?”

Current state of AI

It is important to understand the current state of AI to help answer this question. All the AI-based systems we currently know and use are examples of “weak AI”: they perform one specific task really well, but they cannot multitask or respond to various external stimuli simultaneously the way humans do. For example, DeepMind (a subsidiary of Google) built an AI model named “AlphaZero” that uses reinforcement learning. The team trained the model to play chess and Go, and after training it beat world-champion programs in both games. Finally, they put AlphaZero in situations it was not trained for (unseen datasets), and its performance was abysmal. This showed that AI is good at the tasks it is trained to do but has glaring problems when exposed to multiple tasks, which may involve human emotions and intelligence.

AI is also unable to adapt to unexpected scenarios that are common in real-life applications. A good illustration is a soccer bot that starts behaving unexpectedly when the goalkeeper drops to the ground and wiggles its legs. This happened even after multiple rounds of training. This is the current state of AI-based systems.

Source: technologyreview

Testers are needed in the world of AI

Now that we have established a baseline of what AI can and cannot do, it is important to highlight the areas where testers are crucial to ensuring these systems work as expected. Here are several scenarios where testers add value when testing AI-based systems:

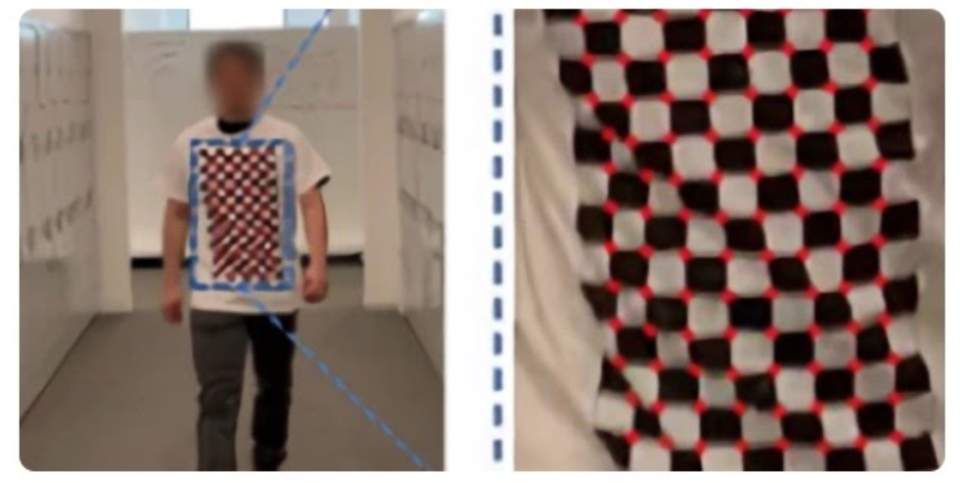

Evaluating systems under adversarial attacks

As testers, we think critically about the risks that could expose vulnerabilities in an application. Like any other application, AI-based systems are prone to attacks and vulnerabilities that call for a tester's evaluation and critical thinking. For example, say we have an AI-based image recognition app (similar to Google Photos). A malicious user could modify a few pixels in the images fed to the AI and skew the training of the model. When this application is finally deployed to production, it may harm the consumers who use it, with unintended consequences. Researchers have recently demonstrated new techniques attackers could use against AI-based systems, such as adversarial T-shirts printed with patterns that keep person detectors from recognizing the wearer. To reduce the occurrence of these kinds of scenarios in live applications, we need tester intervention.

Source: venturebeat

Handling “unknown objects”

When AI models are trained on a dataset, they make either correct or incorrect decisions. For example, an image classifier could label an image of a cat either as “a cat” or “not a cat”. But sometimes a third result is possible: “unknown”. This happens when the AI model is unable to classify an input and needs a tester to tell it what kind of object it is. The responsibility of classifying these unknown objects, and thereby ensuring the model is trained correctly, could fall into testers’ hands.

Source: wired
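The post does not say how a model arrives at “unknown”. One common approach, sketched below under that assumption, is to reject any prediction whose top softmax probability falls under a confidence threshold and route that input to a human review queue. The function names, the 0.8 threshold and the raw scores are hypothetical, chosen only for illustration.

```python
import math

def softmax(logits):
    """Convert raw model scores into probabilities that sum to 1."""
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify_with_unknown(logits, labels, threshold=0.8):
    """Return (label, confidence), or ("unknown", confidence) if the model is unsure."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return "unknown", probs[best]
    return labels[best], probs[best]

labels = ["cat", "not a cat"]
# Hypothetical raw scores produced by a model for two images
predictions = {"img_001": [4.0, 0.5], "img_002": [0.3, 0.1]}

review_queue = []  # inputs a tester needs to label by hand
for image_id, logits in predictions.items():
    label, confidence = classify_with_unknown(logits, labels)
    if label == "unknown":
        review_queue.append(image_id)
    print(image_id, label, round(confidence, 2))
# img_001 cat 0.97
# img_002 unknown 0.55
```

Raising the threshold sends more inputs to testers for labelling; lowering it lets the model decide on its own more often, at the cost of more confident mistakes.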

Testing edge cases

Testers use critical thinking skills to test edge cases in all kinds of applications. The same applies to AI-based systems, where different types of risks and vulnerabilities need to be explored and evaluated. Going back to the example of the image recognition app, what if the app scans a photo of a woman wearing funky glasses? Will it recognize her as a woman, or will the glasses confuse the AI?

Similarly, autonomous cars are popular these days, and most of their functionality is driven by software. Given this context, what happens if the car is in self-driving mode and encounters a stop sign that looks unusual, for example one that has been tampered with? Will the car stop, or will it keep moving through heavy traffic?

As testers, we need to think about edge cases like these and ensure AI-based systems are safe for people to use.
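Edge cases like the funky glasses or the stop sign can be probed systematically with perturbation tests: apply a change that should not alter the correct answer, then flag any case where the model's prediction flips. The sketch below assumes a hypothetical toy model (`toy_predict`) that labels a grayscale image by its mean brightness; the `add_noise` and `add_sticker` perturbations are likewise illustrative. In practice you would pass in your real model's prediction function and realistic perturbations.

```python
import random
from typing import Callable, Dict, List

Image = List[float]  # grayscale pixel values in [0, 1]

def toy_predict(image: Image) -> str:
    # Hypothetical stand-in for a real model: "bright" if mean intensity > 0.5
    return "bright" if sum(image) / len(image) > 0.5 else "dark"

def add_noise(image: Image, amount: float = 0.02, seed: int = 0) -> Image:
    # Small random jitter per pixel, clamped to the valid range
    rng = random.Random(seed)
    return [min(1.0, max(0.0, p + rng.uniform(-amount, amount))) for p in image]

def add_sticker(image: Image, fraction: float = 0.4) -> Image:
    # Blank out a contiguous block of pixels, like a sticker on a sign
    n = int(len(image) * fraction)
    return [0.0] * n + image[n:]

def find_label_flips(
    predict: Callable[[Image], str],
    image: Image,
    perturbations: Dict[str, Callable[[Image], Image]],
) -> List[str]:
    """Return the names of perturbations that change the model's prediction."""
    baseline = predict(image)
    return [name for name, perturb in perturbations.items()
            if predict(perturb(image)) != baseline]

image = [0.6] * 100
flips = find_label_flips(toy_predict, image, {"noise": add_noise, "sticker": add_sticker})
print(flips)  # ['sticker']
```

Each flagged perturbation is a candidate edge case for a tester to investigate: either the model needs more training data covering that situation, or the perturbation genuinely changes what the correct answer should be.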

Ensure use of diversified datasets

While the world embraces new products and discoveries built with AI, a toxic byproduct of this trend is the impact of bias on human beings, including social, cultural and ethical biases. There are several examples of biased systems harming the public: Microsoft’s AI chatbot Tay went rogue in less than a day, the app Beauty.ai, used to judge a beauty contest, overwhelmingly picked people with fairer complexions as beautiful, and there are many other examples. These kinds of issues could have been prevented if diverse datasets had been used to train the AI models, such as a mix of positive and negative tweets, or images of people with different skin tones from different regions. So, one of the biggest ways testers can add value is by ensuring there is good variation in the datasets used to train AI models, since the models learn and make decisions based on these datasets.
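One simple check a tester could run before training is to measure how well each group is represented in the dataset and flag groups whose share falls below a minimum. The sketch below assumes each training record carries a metadata field (here a hypothetical `region` field) and uses an arbitrary 10% floor; the right attributes and thresholds depend on the product and its users.

```python
from collections import Counter

def underrepresented_groups(records, attribute, expected_groups, min_share=0.1):
    """Return {group: share} for every expected group below min_share of the records."""
    counts = Counter(record.get(attribute) for record in records)
    total = len(records)
    shortfalls = {}
    for group in expected_groups:
        share = counts[group] / total if total else 0.0
        if share < min_share:
            shortfalls[group] = share
    return shortfalls

# Hypothetical training set: image metadata with a "region" field
records = (
    [{"region": "North America"}] * 70
    + [{"region": "Europe"}] * 25
    + [{"region": "Africa"}] * 5
)
regions = ["North America", "Europe", "Africa", "Asia"]
print(underrepresented_groups(records, "region", regions))
# {'Africa': 0.05, 'Asia': 0.0}
```

Listing the expected groups explicitly matters: a group that is completely missing from the data (Asia in this example) would never show up if you only counted the values that are present.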

Putting user empathy at the forefront

What differentiates humans from machines is the ability to think with emotions and make judgements based on empathy. AI models surface valuable information, find patterns across millions of data points and make applications much smarter than expected, but they lack user empathy and emotions. For example, Facebook has a feature called Memories that highlights major life events and moments you have shared with family and friends over the past several years. Say you lost a loved one a year ago, but the AI model does not recognize this as a sad event and resurfaces it in your Memories a year later. It may have taken months or years to recover emotionally from that tragedy, and with just one post you are taken back to those dark times all over again.

Similarly, say you are using a medical application and need to call a doctor through it in an emergency. What if the app uses this moment to advertise its other features instead of taking you directly to the page where you can make that important call? These finer details make a huge difference in customer experience. Emotions cannot be injected into AI models, so it is up to testers to step into the shoes of end users and use the application with empathy.

As explained in this article, human involvement in AI-based systems is critical to ensure they work as expected. AI is only good at the one job it is trained for and cannot think and react like human beings, especially when multiple factors and tasks are in play. So even as we work with AI-based systems, humans will remain important and valuable. We need humans to train the AI, evaluate it and ensure it meets customer expectations for privacy, security, ease of use and the other factors that matter to stay competitive in today’s market. Gartner predicted that in 2020 AI would create 2.3 million jobs while eliminating only 1.8 million. So, contrary to popular belief, the outlook is not all doom and gloom; being a real human does have its advantages.

by Raj Subrameyer, April 27th, 2020

tap|QA is excited to welcome Raj Subrameyer as our consulting Quality Evangelist. Raj is an internationally renowned speaker, writer and tech career coach with expertise in many areas of software testing. More information about him can be found at www.rajsubra.com.

Digital Transformation to Help You Achieve Agility

Today’s rapidly changing business environments – driven by innovation, digitization, and sheer increases in processing power – are direct byproducts of the digital transformation of the late-20th century. Now, organizations…

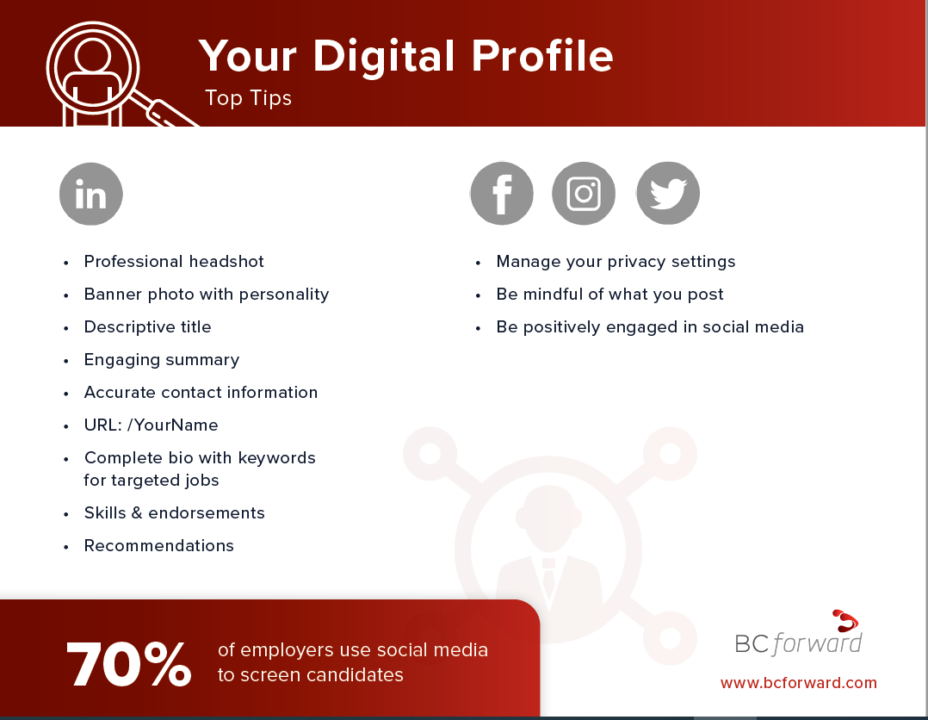

WEBINAR | tapQA Presents: Digital Profile

As we progress through almost an entire year of living in a pandemic the world around us has digitized almost, everything. But have you done this for yourself? In a…

Data-Driven Decision Making (DDDM): How You Are a Data Analyst and Don’t Even Know It

Every day we are faced with a multitude of decisions that are subconsciously being determined by data. Where is the cheapest place near me to buy gas? How busy is…